Rolling Deployments

Objective

Create a service deployment that automatically updates with a newly built image for each commit pushed to your git repository.

Prerequisites

Complete: CI / CD Getting Started Guide

This guide assumes that you already have application code and a corresponding Dockerfile committed and pushed to your version control system.

Background

A common practice in software development is to have an environment such as development that is running the latest code on the primary integration branch of your git repository (e.g., main). We will demonstrate how to easily accomplish this using the Panfactum CI / CD system.

In the walkthrough below, we will reference an example service. This is for reference only. You will not have access to the code or images used by this module, so you must create your own application code and IaC; you cannot simply copy our modules verbatim.

Build and Push Image from Dockerfile

First, you need to build and push an image based on the Dockerfile in your repository. We provide a submodule that will create a WorkflowTemplate that does this for you: wf_dockerfile_build.

Add this submodule to your CI / CD module that you created in the Getting Started guide. As an example of how this might look:

module "demo_service_builder" {
  source = "${var.pf_module_source}wf_dockerfile_build${var.pf_module_ref}"

  name      = "service-builder"
  namespace = local.namespace

  // The git repository where the source code and Dockerfile live
  code_repo = "github.com/example-org/service.git"

  // The AWS ECR repo where the built images will be pushed
  image_repo = "demo-service"
}

Note that the images generated by the Workflow are tagged with the commit hash that was checked out for the build.

Before proceeding, ensure you have used the WorkflowTemplate to successfully create a build of your repo’s Dockerfile.

It is possible that you might need to customize the module further if you are using a more complex repository setup like a monorepo. See the wf_dockerfile_build module for additional documentation.

Deploy the Image

Next, you must deploy your image using IaC. Here is an example of a service’s IaC as well as the terragrunt.hcl that might deploy it:

// terragrunt.hcl
include "panfactum" {
  path   = find_in_parent_folders("panfactum.hcl")
  expose = true
}

terraform {
  // Notice that this is `source`, not `pf_stack_source`.
  // `source` is used for first-party IaC modules.
  source = include.panfactum.locals.source
}

dependency "ingress" {
  config_path  = "../kube_ingress_nginx"
  skip_outputs = true
}

inputs = {
  domain = "api.example.com"
  image_version = run_cmd(
    "--terragrunt-quiet",
    "pf-get-commit-hash",
    "--ref=main",
    "--repo=https://github.com/example-org/service"
  )
}

// The service module's IaC
terraform {
  required_providers {
    pf = {
      source = "panfactum/pf"
    }
  }
}

locals {
  name      = "demo-service"
  namespace = module.namespace.namespace
  port      = 3000
}

module "namespace" {
  source = "${var.pf_module_source}kube_namespace${var.pf_module_ref}"

  namespace = local.name
}

module "database" {
  source = "${var.pf_module_source}kube_pg_cluster${var.pf_module_ref}"

  pg_cluster_namespace  = local.namespace
  aws_iam_ip_allow_list = []

  pg_initial_storage_gb   = 5    # for the purposes of the demo
  pg_max_connections      = 20   # for the purposes of the demo
  pg_instances            = 2    # for the purposes of the demo
  burstable_nodes_enabled = true # for the purposes of the demo

  pull_through_cache_enabled           = var.pull_through_cache_enabled
  monitoring_enabled                   = var.monitoring_enabled
  panfactum_scheduler_enabled          = var.panfactum_scheduler_enabled
  instance_type_anti_affinity_required = var.enhanced_ha_enabled
}

module "demo_service" {
  source = "${var.pf_module_source}kube_deployment${var.pf_module_ref}"

  namespace = module.namespace.namespace
  name      = local.name

  replicas = 2

  common_env = {
    PORT                 = local.port
    DB_HOST              = module.database.pooler_rw_service_name
    DB_PORT              = module.database.pooler_rw_service_port
    DB_NAME              = var.db_name
    DB_SCHEMA            = var.db_schema
    TOKEN_VALIDATION_URL = var.token_validation_url
  }

  common_env_from_secrets = {
    DB_USER = {
      secret_name = module.database.superuser_creds_secret
      key         = "username"
    }

    DB_PASSWORD = {
      secret_name = module.database.superuser_creds_secret
      key         = "password"
    }
  }

  tmp_directories = {
    "cache" = {
      mount_path = "/tmp"
      size_mb    = 100
    }
  }

  containers = [
    {
      name = local.name

      // Should be the account / region where the CICD builder is running
      image_registry   = "12345678912.dkr.ecr.us-east-2.amazonaws.com"
      image_repository = local.name
      image_tag        = var.image_version

      command = ["gunicorn", "--bind", "0.0.0.0:${local.port}", "src.app:app"]

      liveness_probe_type  = "HTTP"
      liveness_probe_port  = local.port
      liveness_probe_route = var.healthcheck_route

      minimum_memory = 100

      ports = {
        http = {
          port = local.port
        }
      }
    }
  ]

  depends_on = [module.database]
}

module "ingress" {
  source = "${var.pf_module_source}kube_ingress${var.pf_module_ref}"

  name      = local.name
  namespace = local.namespace

  domains = [var.domain]
  ingress_configs = [{
    path_prefix   = "/"
    remove_prefix = true
    service       = local.name
    service_port  = local.port
  }]

  cdn_mode_enabled               = false
  cors_enabled                   = true
  cross_origin_embedder_policy   = "credentialless"
  csp_enabled                    = true
  cross_origin_isolation_enabled = true
  rate_limiting_enabled          = true
  permissions_policy_enabled     = true

  depends_on = [module.demo_service]
}

variable "domain" {
  description = "The domain from which the ingress will serve traffic"
  type        = string
}

variable "image_version" {
  description = "The version of the demo user service image to deploy"
  type        = string
}

variable "healthcheck_route" {
  description = "The route to use for the healthcheck"
  type        = string
}

variable "db_name" {
  description = "The name of the database"
  type        = string
}

variable "db_schema" {
  description = "The schema of the database"
  type        = string
}

variable "token_validation_url" {
  description = "The URL to use for token validation"
  type        = string
}

variable "pull_through_cache_enabled" {
  description = "Whether to use the ECR pull through cache for the deployed images"
  type        = bool
  default     = true
}

variable "burstable_nodes_enabled" {
  description = "Whether to enable burstable nodes for the postgres cluster"
  type        = bool
  default     = true
}

variable "namespace" {
  description = "Kubernetes namespace to deploy the resources into"
  type        = string
}

variable "vpa_enabled" {
  description = "Whether the VPA resources should be enabled"
  type        = bool
  default     = true
}

variable "monitoring_enabled" {
  description = "Whether to add active monitoring to the deployed systems"
  type        = bool
  default     = false
}

variable "enhanced_ha_enabled" {
  description = "Whether to add extra high-availability scheduling constraints at the trade-off of increased cost"
  type        = bool
  default     = true
}

variable "panfactum_scheduler_enabled" {
  description = "Whether to use the Panfactum pod scheduler with enhanced bin-packing"
  type        = bool
  default     = true
}

variable "pf_module_source" {
  description = "The source of the Panfactum modules"
  type        = string
}

variable "pf_module_ref" {
  description = "The reference of the Panfactum modules"
  type        = string
}

Notice that our module has an input called image_version which is set in terragrunt.hcl as follows:

image_version = run_cmd(
  "--terragrunt-quiet",
  "pf-get-commit-hash",
  "--ref=main",
  "--repo=https://github.com/example-org/service"
)

The run_cmd function is a Terragrunt feature that allows you to call an external command as your Terragrunt code is evaluated. The stdout from this call is then returned by the function.

We provide a command in the devShell called pf-get-commit-hash that takes a git reference and a repository URL and returns the commit hash that reference points to. By choosing main, our module will deploy the image corresponding to the latest commit on the main branch.
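Because pf-get-commit-hash resolves any git reference, the same pattern can follow a branch other than main. The following is a minimal sketch of the inputs for a second environment. The `staging` branch and the `api.staging.example.com` domain are assumptions made for illustration and do not appear elsewhere in this guide.

```hcl
// terragrunt.hcl for a hypothetical staging deployment.
// The `staging` branch and the domain below are illustrative assumptions.
inputs = {
  domain = "api.staging.example.com"

  // Resolves to the commit hash that the `staging` branch currently points to
  image_version = run_cmd(
    "--terragrunt-quiet",
    "pf-get-commit-hash",
    "--ref=staging",
    "--repo=https://github.com/example-org/service"
  )
}
```

Since built images are tagged with the commit hash, an image for the resolved commit must already have been built and pushed. Otherwise the deployment will reference a tag that does not exist in the registry.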

Before proceeding, ensure you can successfully deploy and run your service. Commit and push this new IaC so that we can pull it in the Workflows we create in the following step.

Combine WorkflowTemplates

Next, we must create a WorkflowTemplate that combines both the wf_dockerfile_build logic and the wf_tf_deploy logic (created in the Getting Started guide). This new WorkflowTemplate will reuse the existing WorkflowTemplates to first build the image and then deploy it.

This composition pattern is described in our guide for triggering Workflows, and we provide an example below. The following snippets deserve an explanation:

module "demo_service_builder" {
  source = "${var.pf_module_source}wf_dockerfile_build${var.pf_module_ref}"

  name      = "service-builder"
  namespace = local.namespace

  // The git repository where the source code and Dockerfile live
  code_repo = "github.com/example-org/service.git"

  // The AWS ECR repo where the built images will be pushed
  image_repo = "demo-service"
}

module "tf_deploy" {
  source = "${var.pf_module_source}wf_tf_deploy${var.pf_module_ref}"

  name      = "tf-deploy-prod"
  namespace = local.namespace

  repo         = "github.com/example-org/infrastructure.git"
  tf_apply_dir = "environments/production"
}

module "build_and_deploy_service" {
  source = "${var.pf_module_source}wf_spec${var.pf_module_ref}"

  name                    = "build-and-deploy-demo-service"
  namespace               = local.namespace
  active_deadline_seconds = 60 * 60
  workflow_parallelism    = 10

  // Snippet 1
  passthrough_parameters = [
    {
      name  = "git_ref"
      value = "main"
    },
    {
      name  = "tf_apply_dir"
      value = "environments/production/us-east-2/service"
    }
  ]

  // Snippet 2
  entrypoint = "entry"
  templates = [
    {
      name = "entry",
      dag = {
        tasks = [
          {
            name = "build-image"
            templateRef = {
              name     = module.demo_service_builder.name
              template = module.demo_service_builder.entrypoint
            }
          },
          {
            name = "deploy-image"
            templateRef = {
              name     = module.tf_deploy.name
              template = module.tf_deploy.entrypoint
            }
            depends = "build-image"
          }
        ]
      }
    }
  ]
}

resource "kubectl_manifest" "build_and_deploy_service_workflow_template" {
  yaml_body = yamlencode({
    apiVersion = "argoproj.io/v1alpha1"
    kind       = "WorkflowTemplate"
    metadata = {
      name      = module.build_and_deploy_service.name
      namespace = local.namespace
      labels    = module.build_and_deploy_service.labels
    }
    spec = module.build_and_deploy_service.workflow_spec
  })

  server_side_apply = true
  force_conflicts   = true
}

Snippet 1

This code passes the git_ref and tf_apply_dir parameters to each referenced template. For this Workflow, we want to build from the main branch and then apply only the module in the environments/production/us-east-2/service directory.

Snippet 2

This code combines templates from the WorkflowTemplates created by the wf_dockerfile_build and wf_tf_deploy submodules and then runs them in sequence. The entrypoint output of each submodule refers to the name of the entry / root template of that WorkflowTemplate.
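To illustrate how the depends field orders tasks in the DAG, here is a sketch of the same dag value extended with a third task. `module.smoke_test` is hypothetical and not part of this guide; it stands in for any module that creates a WorkflowTemplate and exposes name and entrypoint outputs in the same way as the two submodules above.

```hcl
// Sketch only: `module.smoke_test` is a hypothetical module used for illustration.
dag = {
  tasks = [
    {
      name = "build-image"
      templateRef = {
        name     = module.demo_service_builder.name
        template = module.demo_service_builder.entrypoint
      }
    },
    {
      name = "deploy-image"
      templateRef = {
        name     = module.tf_deploy.name
        template = module.tf_deploy.entrypoint
      }
      // Waits for build-image to finish successfully
      depends = "build-image"
    },
    {
      name = "smoke-test"
      templateRef = {
        name     = module.smoke_test.name
        template = module.smoke_test.entrypoint
      }
      // Waits for deploy-image to finish successfully
      depends = "deploy-image"
    }
  ]
}
```

Tasks without a depends field start immediately, so omitting it from two tasks would run them in parallel rather than in sequence.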

Run the Combined Workflow

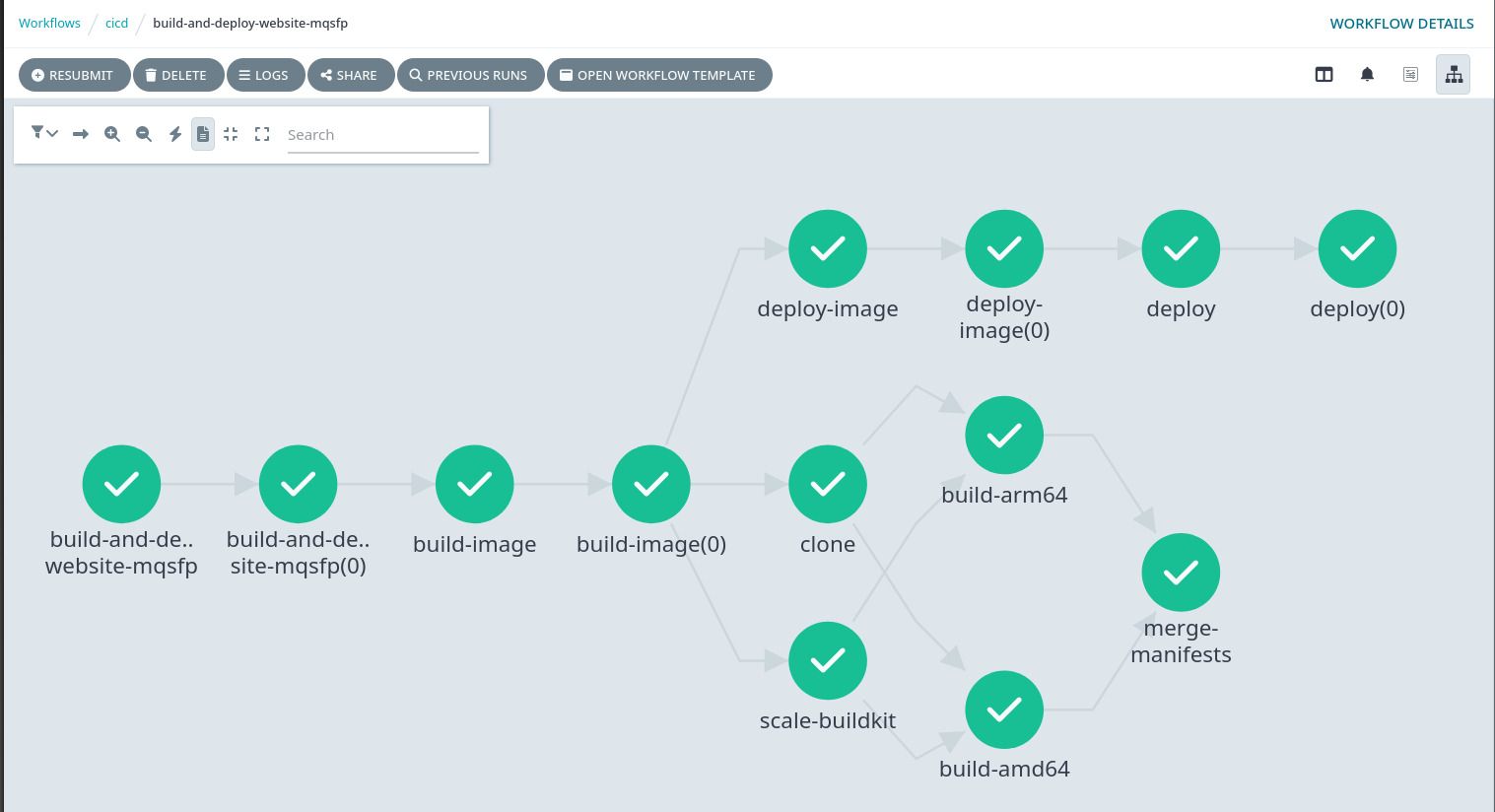

Once you have created an analogous WorkflowTemplate in your IaC, deploy it. You should now see it in the Argo web dashboard. If you create a new Workflow from the WorkflowTemplate, it should successfully run a combined execution graph of both the build and deploy Workflow:

Connect to CI / CD Webhooks

Finally, you can now connect this new WorkflowTemplate to the Argo Sensor for your CI / CD pipeline. This will allow you to trigger a new Workflow from this WorkflowTemplate whenever you push to main (or whichever branch you want to use to automatically trigger deployments).

Sensors can be somewhat cumbersome to configure, so we recommend reviewing the Argo documentation.

Here is an example that is configured to fire builds from GitHub webhook events:

module "sensor" {
  source = "${var.pf_module_source}kube_argo_sensor${var.pf_module_ref}"

  name      = "cicd"
  namespace = local.namespace

  dependencies = [
    {
      name            = "push-to-main"
      eventSourceName = local.event_source_name
      eventName       = "default"
      filters = {
        data = [
          {
            path  = "body.X-GitHub-Event"
            type  = "string"
            value = ["push"]
          },
          {
            path  = "body.ref"
            type  = "string"
            value = ["refs/heads/main"]
          }
        ]
      }
    }
  ]

  triggers = [
    {
      template = {
        // References the wf_spec module defined in the previous section
        name       = module.build_and_deploy_service.name
        conditions = "push-to-main"
        argoWorkflow = {
          operation = "submit"
          source = {
            resource = {
              apiVersion = "argoproj.io/v1alpha1"
              kind       = "Workflow"
              metadata = {
                generateName = module.build_and_deploy_service.generate_name
                namespace    = local.namespace
              }
              spec = {
                workflowTemplateRef = {
                  name = module.build_and_deploy_service.name
                }
              }
            }
          }
        }
      }
    }
  ]

  depends_on = [module.event_bus]
}
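If deployments should follow a branch other than main, only the body.ref filter needs to change, because GitHub push events report the branch as refs/heads/ followed by the branch name. The following is a sketch of the dependency for a hypothetical `develop` branch; the branch name and the dependency name are assumptions for illustration.

```hcl
// Sketch only: the `develop` branch and the "push-to-develop" name are illustrative.
dependencies = [
  {
    name            = "push-to-develop"
    eventSourceName = local.event_source_name
    eventName       = "default"
    filters = {
      data = [
        {
          path  = "body.X-GitHub-Event"
          type  = "string"
          value = ["push"]
        },
        {
          // Matches pushes to the develop branch only
          path  = "body.ref"
          type  = "string"
          value = ["refs/heads/develop"]
        }
      ]
    }
  }
]
```

The conditions field of the trigger must name the same dependency ("push-to-develop" in this sketch), and the git_ref parameter and pf-get-commit-hash reference used elsewhere in the pipeline should point at the same branch so that the image that gets built is the one that gets deployed.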