Policy Controller

Install the Kyverno policy engine, which lets you define cluster-wide rules for automatically generating, mutating, and validating Kubernetes resources.

Background

Kyverno is a CNCF project that allows us to add many features to vanilla Kubernetes:

- Native integration with the pull through cache

- Support for arm64 and spot instances

- Support for our bin-packing pod scheduler

- Descriptive labels and automatically injected reflexive environment variables for pods

- Production-ready security and uptime hardening for your workloads

- and much more.

Additionally, Kyverno allows you to deploy your own policies that control what and how resources are deployed to your clusters.

Kyverno works by installing admission webhooks that allow Kyverno to receive, update, and even reject Kubernetes manifests before they are applied to the cluster. Kyverno’s behavior is configured through Kyverno Policies.

For a full architectural breakdown, see Kyverno’s excellent documentation.

Deploy Kyverno

We provide a module for deploying Kyverno, kube_kyverno.

Let’s deploy it now:

1. Create a new directory adjacent to your aws_eks module called kube_kyverno.

2. Add a terragrunt.hcl to that directory that looks like this:

       include "panfactum" {
         path   = find_in_parent_folders("panfactum.hcl")
         expose = true
       }

       terraform {
         source = include.panfactum.locals.pf_stack_source
       }

       dependency "cilium" {
         config_path  = "../kube_cilium"
         skip_outputs = true
       }

       inputs = {}

3. Run pf-tf-init to enable the required providers.

4. Run terragrunt apply.

5. If the deployment succeeds, you should see the various Kyverno pods running.
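If you prefer a command-line check, the following is a minimal sketch for listing the Kyverno pods. It assumes kubectl is pointed at the new cluster and that either the pod names or their namespace contain the string kyverno. The helper names kyverno_pods and kyverno_pods_not_running are hypothetical and are not part of the Panfactum tooling.

```shell
# Hypothetical helpers (not part of Panfactum). Assumes the current
# kubectl context targets the cluster you just deployed to.

# List pods in any namespace whose namespace or name contains "kyverno".
kyverno_pods() {
  kubectl get pods --all-namespaces --no-headers | grep -i 'kyverno'
}

# Print only the Kyverno pods whose STATUS column (4th field) is not "Running".
kyverno_pods_not_running() {
  kyverno_pods | awk '$4 != "Running"'
}
```

Running kyverno_pods should print one line per pod. If kyverno_pods_not_running prints nothing, every listed pod reports a Running status. Pods created by short-lived jobs may legitimately show another status, so treat its output as a prompt to look closer rather than as a failure.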

Deploy Panfactum Policies

While kube_kyverno installs Kyverno itself, Kyverno does not apply any policy rules by default. To load in the default Kyverno Policies, we provide a module called kube_policies.

Let’s deploy the policies now:

1. Create a new directory adjacent to your kube_kyverno module called kube_policies.

2. Add a terragrunt.hcl to that directory that looks like this:

       include "panfactum" {
         path   = find_in_parent_folders("panfactum.hcl")
         expose = true
       }

       terraform {
         source = include.panfactum.locals.pf_stack_source
       }

       dependency "kyverno" {
         config_path  = "../kube_kyverno"
         skip_outputs = true
       }

       inputs = {}

3. Run pf-tf-init to enable the required providers.

4. Run terragrunt apply.

You can verify that the policies are working as follows:

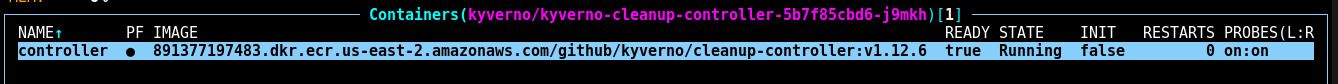

1. Examine the containers for any pod in the cluster (press enter when selecting the pod in k9s). Notice that the image is being pulled directly from the GitHub container registry (ghcr.io) rather than from the pull-through cache.

2. Delete the pod you just inspected (ctrl+d when selecting the pod in k9s).

3. Examine the containers for the new pod that gets created to take its place. Notice that the image is now being pulled from your ECR pull-through cache. This occurred because our Kyverno policies dynamically replaced the images for the pod when it was created.

Pods are immutable after they are created. As a result, if you want these new policies to apply to all pods in your cluster, you must first delete the pods to allow them to be recreated. You do not need to do this now, because a subsequent guide step takes care of it.
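The same before-and-after comparison can be made without k9s. The sketch below is one possible approach, assuming kubectl access to the cluster. The function names pod_images and uses_ghcr_directly are hypothetical, and the namespace and pod name are placeholders you supply yourself.

```shell
# Hypothetical helpers (not part of Panfactum).

# Print one image reference per line for the containers of a pod.
# Usage: pod_images <namespace> <pod-name>
pod_images() {
  kubectl get pod "$2" --namespace "$1" \
    --output jsonpath='{range .spec.containers[*]}{.image}{"\n"}{end}'
}

# Read image references on stdin and succeed if any of them is still
# pulled directly from ghcr.io.
uses_ghcr_directly() {
  grep -q '^ghcr\.io/'
}
```

For example, `pod_images <namespace> <pod-name> | uses_ghcr_directly && echo "not rewritten yet"` prints a message only for a pod that was created before the policies were applied. After the pod is deleted and recreated, the same check on its replacement should print nothing.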

Run Network Tests

This test takes a while to complete, but please run it before continuing. If something is broken, it will break other components in non-obvious ways, and the fix will usually require re-provisioning your entire cluster.

This test can also be somewhat flaky. If it fails the first time, try running it again.

Now that both networking and the policy controller are installed, let’s verify that everything is working as intended. The easiest approach is to perform a battery of network tests against the cluster to ensure that pods can both launch successfully and communicate with one another.

Cilium comes with a companion CLI tool that is bundled with the Panfactum devShell. We will use it to verify that Cilium is working as intended:

1. Run cilium connectivity test --test '!pod-to-pod-encryption'. [1] [2]

2. Wait about 20-30 minutes for the test to complete.

3. If everything completes successfully, you should receive a message like this:

       ✅ All 46 tests (472 actions) successful, 18 tests skipped, 0 scenarios skipped.

4. Unfortunately, the test does not clean up after itself. You should run kubectl delete ns cilium-test to remove the test resources.
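Because the test can be flaky and leaves resources behind, you may find it convenient to wrap the two commands above in a small script. The following is only a sketch: retry and run_network_tests are hypothetical helper names, and the limit of two attempts is an arbitrary choice rather than a Panfactum recommendation.

```shell
# Hypothetical helpers (not part of Panfactum or the cilium CLI).

# Run a command up to N times, stopping at the first success.
# Usage: retry <max-attempts> <command> [args...]
retry() {
  max="$1"; shift
  attempt=1
  while [ "$attempt" -le "$max" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt $attempt of $max failed" >&2
    attempt=$((attempt + 1))
  done
  return 1
}

# Run the connectivity test at most twice, then remove the leftover
# test namespace whether or not the test passed.
run_network_tests() {
  status=0
  retry 2 cilium connectivity test --test '!pod-to-pod-encryption' || status=$?
  kubectl delete ns cilium-test
  return "$status"
}
```

Calling run_network_tests returns the status of the connectivity test, so a non-zero exit still signals that the test needs investigating even though the cleanup has already run.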

Next Steps

Now that the policy engine and basic policies are deployed, let’s deploy storage controllers to allow your pods to utilize storage.

Footnotes

[1] Skipping the pod-to-pod-encryption test is required due to this issue.

[2] If you receive an error like "Unable to detect Cilium version, assuming vX.X.X for connectivity tests: unable to parse cilium version on pod", that means that you tried to run the test while not all cilium-xxxxx pods were ready. Wait for all the cilium-xxxxx pods to reach a ready state and then try again.