Internal Cluster Networking

Install the basic Kubernetes cluster networking primitives via the kube_core_dns and kube_cilium modules.

Background

In the Panfactum framework, we use CoreDNS to handle cluster DNS resolution and Cilium to handle all the L3/L4 networking in our Kubernetes cluster.

In this guide, we won’t go into detail about the underlying design decisions and network concepts, so we recommend reviewing the concept documentation for more information.

Deploy Cilium

Cilium provides workloads in your clusters with network interfaces that allow them to connect with each other and the wider internet. Without this controller, your pods would not be able to communicate. We provide a module for deploying Cilium: kube_cilium.

Let’s deploy it now.

Deploy the Cilium Module

1. Create a new directory adjacent to your `aws_eks` module called `kube_cilium`.

2. Add a `terragrunt.hcl` to that directory that looks like this:

   ```hcl
   include "panfactum" {
     path   = find_in_parent_folders("panfactum.hcl")
     expose = true
   }

   terraform {
     source = include.panfactum.locals.pf_stack_source
   }

   dependency "cluster" {
     config_path  = "../aws_eks"
     skip_outputs = true
   }

   inputs = {}
   ```

3. Run `pf-tf-init` to enable the required providers.

4. Run `terragrunt apply`.

If the deployment succeeds, you should see the various Cilium pods running:

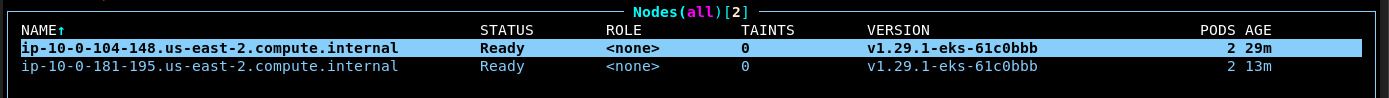
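You can also check this from the command line. The sketch below filters `kubectl get pods` output for pods whose names start with `cilium` and reports any that are not fully ready. It rests on a few assumptions:

- Cilium's pod names carry the `cilium` prefix.
- The namespace is unknown, because this guide does not state which one the module uses. The sketch therefore searches all namespaces.
- `cilium_not_running` is a hypothetical helper and is not part of Panfactum.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Panfactum).
# Reads `kubectl get pods --all-namespaces --no-headers` output on stdin
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE).
# Prints every pod whose name starts with "cilium" (an assumption about
# naming) and that is either not Running or not fully ready (READY x/y with x != y).
# Exits 1 if any such pod was found, 0 otherwise.
cilium_not_running() {
  awk '
    $2 ~ /^cilium/ {
      split($3, r, "/")
      if ($4 != "Running" || r[1] != r[2]) { print $2; bad = 1 }
    }
    END { exit bad }
  '
}

# Usage against a live cluster:
#   kubectl get pods --all-namespaces --no-headers | cilium_not_running \
#     && echo "all cilium pods are Running and ready"
```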

Additionally, all the nodes should now be in the `Ready` state.
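Node readiness can be checked in a similar way. `kubectl wait` with a `Ready` condition blocks until every node reports Ready. The hypothetical `nodes_not_ready` helper below takes a different approach: it lists the nodes that are not yet Ready, based on `kubectl get nodes` output. It is an illustrative sketch, not a Panfactum tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Panfactum).
# Reads `kubectl get nodes --no-headers` output on stdin
# (columns: NAME STATUS ROLES AGE VERSION).
# Prints every node whose STATUS is not exactly "Ready" and exits 1 if any
# were found. A cordoned node ("Ready,SchedulingDisabled") is reported too.
nodes_not_ready() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Usage against a live cluster:
#   kubectl get nodes --no-headers | nodes_not_ready && echo "all nodes Ready"
#
# Or block until every node is Ready (up to five minutes):
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```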

Deploy CoreDNS

Kubernetes provides human-readable DNS names for pods and services running inside the cluster (e.g., my-service.namespace.svc.cluster.local); however, it does not come with its own DNS servers. The standard way to provide this functionality is via CoreDNS. We provide a module to deploy CoreDNS called kube_core_dns.
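Service DNS names follow the pattern `<service>.<namespace>.svc.<cluster-domain>`. The sketch below builds such a name. It assumes the default cluster domain `cluster.local`, although a cluster can be configured with a different one.

Once CoreDNS is deployed, you can resolve the same name from a throwaway pod. The `kubernetes` Service in the `default` namespace exists in every cluster, which makes it a convenient target. The helper name `svc_fqdn` and the busybox image tag are illustrative choices.

```shell
#!/usr/bin/env bash
# Build the in-cluster DNS name of a Service (hypothetical helper).
# Assumption: the cluster domain is "cluster.local" unless a third
# argument overrides it.
svc_fqdn() {
  local service=$1 namespace=$2 domain=${3:-cluster.local}
  printf '%s.%s.svc.%s\n' "$service" "$namespace" "$domain"
}

svc_fqdn my-service namespace
# prints: my-service.namespace.svc.cluster.local

# After CoreDNS is running, resolve a name from inside the cluster:
#   kubectl run dns-test --rm -it --restart=Never --image=busybox:1.36 -- \
#     nslookup "$(svc_fqdn kubernetes default)"
```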

Let’s deploy it now.

Deploy the CoreDNS Module

1. Create a new directory adjacent to your `aws_eks` module called `kube_core_dns`.

2. Add a `terragrunt.hcl` to that directory that looks like this:

   ```hcl
   include "panfactum" {
     path   = find_in_parent_folders("panfactum.hcl")
     expose = true
   }

   terraform {
     source = include.panfactum.locals.pf_stack_source
   }

   dependency "cluster" {
     config_path = "../aws_eks"
   }

   inputs = {
     service_ip = dependency.cluster.outputs.dns_service_ip
   }
   ```

3. Run `pf-tf-init` to enable the required providers.

4. Run `terragrunt apply`.

If the deployment succeeds, you should see a `core-dns` deployment with either 1/2 or 2/2 pods running.

If you see only 1/2 pods running, that is because we force the CoreDNS pods to run on nodes with different instance types for high availability. However, the cluster cannot dynamically provision additional instance types until you complete the autoscaling section of the bootstrapping guide. Once you complete that section, both CoreDNS pods will launch successfully. Running a single pod in the interim should not have any impact.
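The hypothetical `core_dns_ready` helper below scripts this check. It reads `kubectl get deployments` output and prints the READY value of the `core-dns` deployment. It succeeds as long as at least one replica is ready, so 1/2 counts as acceptable for now, as described in the paragraph above. Two assumptions apply:

- The deployment name `core-dns` is taken from this guide.
- All namespaces are searched, because the guide does not name the namespace.

This is a sketch, not a Panfactum tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Panfactum).
# Reads `kubectl get deployments --all-namespaces --no-headers` output on stdin
# (columns: NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE).
# Prints the READY value (e.g. "1/2") of the deployment named core-dns.
# Exits 0 if at least one replica is ready. Exits 1 if no replica is ready
# or no core-dns deployment was found.
core_dns_ready() {
  awk '
    $2 == "core-dns" {
      found = 1
      print $3
      split($3, r, "/")
      if (r[1] + 0 < 1) bad = 1
    }
    END { exit (!found || bad) }
  '
}

# Usage against a live cluster:
#   kubectl get deployments --all-namespaces --no-headers | core_dns_ready
#
# If a replica is still Pending, its scheduling events explain why:
#   kubectl describe pod -n <namespace> <pod-name>
```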

Next Steps

Now that basic networking is working, we will configure a policy engine for the cluster.